Readers:

In Part 3 of this series on data blending, we examined the benefits of blending data. We also reviewed an example of data blending that illustrated the possible outcomes of an election for the District 2 Supervisor of San Francisco.

Today, in Part 4 of this series, we will discuss data blending design principles and show another illustrative example of data blending using Tableau.

Again, much of Parts 1, 2, 3 and 4 is based on a research paper written by Kristi Morton of the University of Washington (and others) [1].

You can learn more about Ms. Morton’s research as well as other resources used to create this blog post by referring to the References at the end of the blog post.

Best Regards,

Michael

Data Blending Design Principles

In this part, we describe the primary design principles upon which Tableau’s data blending feature was based. These principles were influenced by the application needs of Tableau’s end-users. In particular, we designed the blending system to integrate datasets on the fly, be responsive to change, and be driven by the visualization. Additionally, we assumed that the user may not know exactly what she is looking for initially and needs a flexible, interactive system that can handle exploratory visual analysis.

Push Computation to Data and Minimize Data Movement

Tableau’s approach to data visualization allows users to leverage the power of a fast database system. Tableau’s VizQL algebra is a declarative language for succinctly describing visual representations of data and analytics operations on the data. Tableau compiles the VizQL declarative formalism representing a visual specification into SQL or MDX and pushes this computation close to the data, where the fast database system handles computationally intensive aggregation and filtering operations. In response, the database provides a relatively small result set for Tableau to render. This is an important factor in Tableau’s choice of post-aggregate data integration across disparate data sources – since the integrated result sets must represent a cognitively manageable amount of information, the data integration process operates on small amounts of aggregated, filtered data from each data source. This approach avoids the costly migration effort to collocate massive data sets in a single warehouse, and continues to leverage fast databases for performing expensive queries close to the data.
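The post-aggregate idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Tableau's implementation: the in-memory SQLite databases stand in for two separate fast databases, and the table name, the region values, and the helper names `aggregate_in_database` and `blend` are all hypothetical. Each source is aggregated where it lives, and only the small result sets are joined, with the primary driving a left join.

```python
import sqlite3


def aggregate_in_database(rows):
    """Aggregate inside the database and return only the small result set."""
    con = sqlite3.connect(":memory:")  # stand-in for a remote data source
    con.execute("CREATE TABLE facts (k TEXT, v REAL)")
    con.executemany("INSERT INTO facts VALUES (?, ?)", rows)
    result = dict(con.execute("SELECT k, SUM(v) FROM facts GROUP BY k"))
    con.close()
    return result


def blend(primary, secondary):
    """Left-join the aggregated secondary result onto the aggregated primary."""
    return {k: (v, secondary.get(k)) for k, v in primary.items()}


# Hypothetical detail rows held in two separate sources.
primary_rows = [("East", 100.0), ("East", 50.0), ("West", 70.0)]
secondary_rows = [("East", 10.0), ("East", 5.0), ("North", 8.0)]

blended = blend(aggregate_in_database(primary_rows),
                aggregate_in_database(secondary_rows))
print(blended)
```

Only one row per region ever leaves each database, which is the point of pushing the aggregation to the data.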

Automate as Much as Possible, but Keep User in Loop

Tableau’s primary focus has been on ease of use since most of Tableau’s end-users are not database experts, but come from a variety of domains and disciplines: business analysts, journalists, scientists, students, etc. This led them to take a simple, pay-as-you-go integration approach in which the user invests minimal upfront effort or time to receive the benefits of the system. For example, the data blending system does not require the user to specify schemas for their data sets; rather, the system tries to infer this information as well as how to apply schema matching techniques to blend them for a given visualization. Furthermore, the system provides a simple drag-and-drop interface for the user to specify the fields for a visualization, and if there are fields from multiple data sources in play at the same time, the blending system infers how to join them to satisfy the needs of the visualization.

In the case that something goes wrong, for example, if the schema matching could not succeed, the blending system provides a simple interface for specifying data source relationships and how blending should proceed. Additionally, the system provides several techniques for managing the impact of dirty data on blending, which we discuss in more detail in Part 5 of this series.
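As a rough illustration of "infer first, let the user override," here is a minimal Python sketch. It assumes the simplest possible matching rule, case-insensitive equality of field names, which is an assumption made for this example and not a description of Tableau's actual schema matching. The function name `infer_links` and the field names are hypothetical.

```python
def infer_links(primary_fields, secondary_fields, manual=None):
    """Return a mapping of primary field -> secondary field to blend on.

    If the user supplies a manual mapping, it wins; otherwise fields are
    paired when their names match, ignoring case (an assumed rule).
    """
    if manual:
        return dict(manual)
    lookup = {name.lower(): name for name in secondary_fields}
    return {name: lookup[name.lower()]
            for name in primary_fields if name.lower() in lookup}


primary_fields = ["Unit", "Measure Date", "Numerator"]
secondary_fields = ["unit", "Fall Date", "Injury"]

inferred = infer_links(primary_fields, secondary_fields)
overridden = infer_links(primary_fields, secondary_fields,
                         manual={"Measure Date": "Fall Date"})
print(inferred, overridden)
```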

Another Example: Patient Falls Dashboard [3]

NOTE: The following example is from Jonathan Drummey via the Drawing with Numbers blog site. The example uses Tableau v7, but at the end of the instructions on how he creates this dashboard in Tableau v7, Mr. Drummey includes instructions on how the steps became simpler in Tableau v8. I have included a reference to this blog post on his site in the reference section of my blog entry. The “I”, “me” voice you read in this example is that of Mr. Drummey.

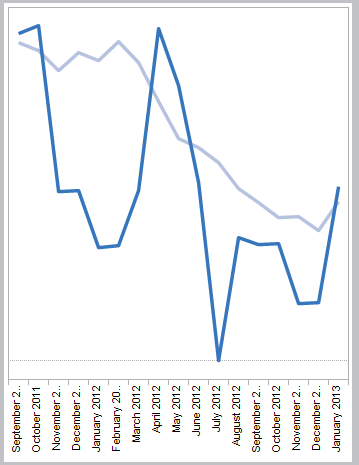

As part of improving patient safety, we track all patient falls in our healthcare system, and the number of patient days – the total of the number of days of inpatient stays at the hospital. Every month we report to the state our “fall rate,” a metric of the number of falls with injury for certain units in the hospital per 1000 patient days, i.e. days that patients are at the hospital. Our annualized target is to have fewer than 0.7 falls with injury per 1000 patient days.
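For readers who want the arithmetic spelled out, the fall rate is just falls with injury scaled to 1000 patient days. The counts below are made up for illustration, and `fall_rate` is a hypothetical helper name.

```python
def fall_rate(falls_with_injury, patient_days):
    """Falls with injury per 1000 patient days."""
    return 1000 * falls_with_injury / patient_days


TARGET = 0.7  # annualized target from the text

rate = fall_rate(3, 5000)   # hypothetical: 3 falls with injury in 5000 patient days
meets_target = rate < TARGET
print(rate, meets_target)
```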

A goal for our internal dashboard is to show the last 13 months of fall rates as a line chart, with the most recent fall events as a bar chart, in a combined chart, along with a separate text table showing some details of each fall event. Here’s the desired chart, with mocked-up data:

![combo bars and lines]()

On the surface, blending this data seems really straightforward. We generate a falls rate every month for every reporting unit, so use that as the primary, then blend in the falls as they happen. However, this has the following issues:

- Sparse Data – As I’m writing this, it’s March 7th. We usually don’t get the denominator of the patient days for the prior month (February) for a few more days yet, so there won’t be any February row of measure data to use as the primary to get the February fall events to show on the dashboard. In addition, there still wouldn’t be any March data to get the March fall events. Sometimes when working with blends, the solution is to flip our choices for the primary and secondary datasource. However, that doesn’t work either because a unit might go for months or years without a patient fall, so there wouldn’t be any fall events to blend in the measure data.

- Falls With and Without Injury – In the bar chart, we don’t just want to show the number of patient falls, we want to break down the falls by whether or not they were falls with injury – the numerator for the fall rate metric – and all other falls. The goal of displaying that data is to help the user keep in mind that as important as it is to reduce the number of falls with injury, we also need to keep the overall number of falls down as well. No fall = no chance of fall with injury.

- Unit Level of Detail – Because the blend needs to work at the per-unit level of detail as well as across all reporting units, that means (in version 7 at least) that the Unit needs to be in the view for the blend to work. But we want to display a single falls rate no matter how many units are selected.

Sparse Data

To deal with the issue of sparse data, there are a few possible solutions:

- Change the combined line and bar chart into separate charts. This would perhaps be the easiest, though it would require some messing about with filters, hidden reference lines, and continuous date axes to ensure that the two charts had similar axis ranges no matter what. However, that would miss out on the key capability of the combined chart to directly see how a fall contributes to the fall rate. In addition, there would be no reason to write this blog post.


- Perform padding in the data source, either via a query/view or Custom SQL. In an earlier version of this project I’d built this, and maintaining a bunch of queries with Cartesian joins isn’t my favorite cup of tea.

- Build a scaffold data source with all combinations of the month and unit and use the scaffold as the primary data source. While possible, this introduces maintenance issues when there’s a need for additional fields at a finer level of detail. For example, the falls measure actually has three separate fall rates – monthly, quarterly, and annual. These are generated as separate rows in our measures data and the particular duration is indicated by the Period field. So the scaffold source would have to include the Period field to get the data, but then that could be too much detail for the blended fall event data, and make for more complexity in the calculations to make sure the aggregations worked properly.

- Do a tiny bit of padding in the query, then do the rest in Tableau via Show Missing Values aka domain padding. As I’d noted in an earlier post on blending, domain padding occurs before data is blended so we can pad out the measure data through the current date and then include all the falls. This is the technique I chose, for the reason that adding one padding row to the data is trivial and turning on Show Missing Values is a couple of mouse clicks. Here’s how I did that:

In my case, the primary data source is a Microsoft Access query that gets the falls measure results from a table that also holds results for hundreds of other metrics that we track. I created a second query with the same number of columns that returns Null for every field except the Measure Date, which has a value of 1/1/1900. Then a third query UNION’s those two queries together, and that’s what is used as the data source in Tableau.

Then, in Tableau, I added a calculated field called Date with the following formula:

//used for padding out display to today

IF [Measure Date] == #1/1/1900# THEN

TODAY()

ELSE

[Measure Date]

END
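The same padding trick can be sketched outside Tableau. In this Python illustration, the union of the real measure rows with one all-Null padding row is modelled as a list of dicts, and the sentinel date 1/1/1900 is swapped for today's date. The row layout and the name `display_date` are assumptions made for the example, and `today` is passed in explicitly so the sketch is repeatable.

```python
from datetime import date

SENTINEL = date(1900, 1, 1)  # the placeholder Measure Date on the padding row


def display_date(measure_date, today):
    """Mirror of the Date calculated field: sentinel becomes today."""
    return today if measure_date == SENTINEL else measure_date


# Hypothetical result of the UNION query: real rows plus one padding row.
rows = [
    {"measure_date": date(2012, 12, 31), "numerator": 2, "denominator": 3100},
    {"measure_date": date(2013, 1, 31), "numerator": 1, "denominator": 2900},
    {"measure_date": SENTINEL, "numerator": None, "denominator": None},
]

today = date(2013, 3, 7)
dates = [display_date(r["measure_date"], today) for r in rows]
print(dates)
```

The padding row carries no measure values. Its only job is to stretch the date range out to the current month.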

The measure results data contains a row per measure, reporting unit, and the period. These are pre-calculated because the data is used in a variety of different outputs. Since in this dashboard we are combining the results across units, we can’t just use the rate, we need to go back to the original numerator and denominator. So, I also created a new field for the Calculated Rate:

SUM([Numerator])/SUM([Denominator])
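A small numeric example shows why the numerator and denominator are summed before dividing rather than combining the pre-calculated rates. The two units and their counts are invented for illustration.

```python
# Hypothetical per-unit measure rows for one month.
rows = [
    {"unit": "Unit A", "numerator": 1, "denominator": 1000},
    {"unit": "Unit B", "numerator": 3, "denominator": 4000},
]

# Calculated Rate: SUM([Numerator]) / SUM([Denominator])
calculated_rate = (sum(r["numerator"] for r in rows)
                   / sum(r["denominator"] for r in rows))

# Averaging the pre-calculated per-unit rates gives a different answer,
# because it ignores how many patient days each unit contributed.
average_of_rates = sum(r["numerator"] / r["denominator"] for r in rows) / len(rows)

print(calculated_rate, average_of_rates)
```

Here the combined rate is 4 / 5000 = 0.0008, while the average of the two unit rates is 0.000875.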

Now it’s possible to start building the line chart view:

- Put the Month(Date) – the full month/year version as a discrete – on Columns, Calculated Rate on Rows, Period on the Color Shelf. This only shows the data that exists in the data source, including the empty value for the current month (March in this case):

![Screen Shot 2013-03-09 at 1.11.25 PM]()

- Turn on Show Missing Values for Month(Date) to start domain padding. Now we can see the additional column(s) for the month(s) (February in this case) between January and the current month that Tableau has added in:

![Screen Shot 2013-03-09 at 1.14.19 PM]()
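The effect of domain padding on a month field can be pictured as filling in every month between the earliest and latest values present. The Python sketch below models only that visible effect, not how Tableau implements it, and `pad_months` is a hypothetical helper.

```python
from datetime import date


def pad_months(months):
    """Return the first day of every month from the earliest to the latest."""
    first, last = min(months), max(months)
    padded = []
    year, month = first.year, first.month
    while (year, month) <= (last.year, last.month):
        padded.append(date(year, month, 1))
        # Roll over to January of the next year after December.
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return padded


# Months present in the data: no December, no February.
present = [date(2012, 11, 1), date(2013, 1, 1), date(2013, 3, 1)]
padded = pad_months(present)
print(padded)
```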

With a continuous (green pill) date, this particular set-up won’t work in version 8. Tableau’s domain padding is not triggered when the last value of the measure is Null. I’m hoping this is just an issue with the beta; I’ll revise this section with an update once I find out what’s going on.

Even though the measure data only has end of month dates, instead of using Exact Date for the month I used Month(Date) because of two combined factors. One is that the default import of most date fields from MS Jet sources turns them into DateTime fields. The other is that Show Missing Values won’t work on an Exact Date for a DateTime field: you have to assign an aggregation to a DateTime (even Second will work). This is because domain padding at this level can create an immense number of new rows and cause Tableau to run out of memory, so Tableau keeps the option off unless you want it. Also note that you can turn on Show Missing Values for an Exact Date for a Date Field.

- Now for some cleanup steps: for the purposes of this dashboard, filter Period to remove Monthly (we do quarterly reporting), but leave in Null because that’s needed for the domain padding.

- Right-click Null on the Color Legend and Hide it. Again, we don’t exclude this because this would cause the extra row for the domain padding to fail.

- Set up a relative date filter on the Date field for the last 13 months. This filter works just fine with the domain padding.

Filtering on Unit

Here’s a complicating factor: If we add a filter on Unit, there’s a Null listed here:

![Screen Shot 2013-03-09 at 1.18.31 PM]()

I’d just want to see the list of units. But if we filter that Null out, then we lose the domain padding, the last date is now January 2013:

![Screen Shot 2013-03-09 at 1.18.58 PM]()

One solution here would be to alter the padding to add a padding row for every unit, instead of just one unit. Since Tableau doesn’t let us just hide elements in a filter, I chose to use a parameter filter instead: there are more reporting units in our production data than we are displaying on the dashboards, yet the all-unit rate needs to include all of the data. Setting this up included a parameter with All and each of the units, and a calculated field called “Chosen Unit Filter” with the following formula, which is set to Filter on False:

[Choose Unit] == "All" OR [Choose Unit] == [Unit]
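Here is a hedged Python sketch of how such a parameter filter might behave on the padding row. It rests on two assumptions that are not spelled out in the text: that comparing a value with Null yields Null (modelled here as `None`), and that "Filter on False" means excluding only the rows where the result is False. The unit names are hypothetical.

```python
def chosen_unit_filter(choice, unit):
    """Model of: [Choose Unit] == "All" OR [Choose Unit] == [Unit]."""
    if choice == "All":
        return True
    if unit is None:
        return None  # assumed: a comparison with Null stays Null
    return choice == unit


def kept_rows(rows, choice):
    """Exclude only rows where the filter is False (assumed reading)."""
    return [r for r in rows if chosen_unit_filter(choice, r["unit"]) is not False]


rows = [
    {"unit": "4 West"},
    {"unit": "ICU"},
    {"unit": None},  # the padding row
]

all_units = kept_rows(rows, "All")
icu_only = kept_rows(rows, "ICU")
print(all_units, icu_only)
```

Under those assumptions, the padding row survives whichever unit is chosen, so the domain padding keeps working.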

Falls With and Without Injury

In a fantasy world, to create the desired stacked bars I’d be able to drag the Number of Records from the secondary datasource, i.e. the number of fall events, drag an Injury indicator onto the Color Shelf, and be done. However, that runs into the issue of having a finer level of detail in the secondary than in the primary, which I’ll walk through solutions for in the next section. In this case, since there are only two different numbers, the easy way is to generate two separate measures, then use Measure Names/Measure Values to create the stacked bars – Measure Values on Rows, and Measure Names on the Color Shelf. Here’s the basic calculation for Falls with Injury:

SUM(IF [Injury] != "None" THEN 1 ELSE 0 END)

We’re using a row-level calculated field to generate the measure, and a slightly different calc for Falls w/out Injury.
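In Python terms, the two measures amount to conditional counts over the fall events. The event rows are invented, and the Falls w/out Injury expression shown here (testing for equality with "None") is an assumption, since the text only says it is a slightly different calc.

```python
# Hypothetical fall events; "None" means the fall caused no injury.
falls = [
    {"unit": "Unit A", "injury": "None"},
    {"unit": "Unit A", "injury": "Minor"},
    {"unit": "Unit B", "injury": "None"},
    {"unit": "Unit B", "injury": "Major"},
    {"unit": "Unit B", "injury": "None"},
]

# SUM(IF [Injury] != "None" THEN 1 ELSE 0 END)
falls_with_injury = sum(1 if f["injury"] != "None" else 0 for f in falls)

# Assumed counterpart for Falls w/out Injury.
falls_without_injury = sum(1 if f["injury"] == "None" else 0 for f in falls)

print(falls_with_injury, falls_without_injury)
```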

Unit Level of Detail

When we want to blend in Tableau at a finer level of detail and aggregate to a higher level, historically there have been three options:

- Don’t use blending at all, instead use a query to perform the “blend” outside of Tableau. In the case that there are totally different data sources, this can be more difficult but not impossible by using one of the systems or a different system to create a federated data source, for example by adding your Oracle table as an ODBC connection to your Excel data, then making the query on that. In this case, we don’t have to do that.

- Use Tableau’s Primary Groups feature to “push” the detail from the secondary into the primary data source. This is a really helpful feature; the one drawback is that it’s not dynamic, so any time there are new groupings in the secondary it would have to be re-run. Personally, I prefer automating as much as possible so I tend not to use this technique.

- Set up the view with the needed dimensions in the view – on the Level of Detail Shelf, for example – and then use table calculations to do the aggregation. This is how I’ve typically built this kind of view.

Tableau version 8 adds a fourth option:

- Tell Tableau what fields to blend on, then bring in your measures from the secondary.

I’ll walk through the table calculation technique, which works the same in version 7 and version 8, and then how to take advantage of v8′s new feature.

Using Table Calculations to Aggregate Blended Data

In order to blend the falls data at the hospital unit level to make sure that we’re only showing falls for the selected unit(s), the Unit has to be in the view (on the Rows, Columns, or Pages Shelves, or on the Marks Card). Since we don’t actually need to display the Unit, the Level of Detail Shelf is where we’ll put that dimension. However, just adding that to the view leads to a bar for each unit, for example for April 2012 one unit had one fall with injury and another had two, and two units each had two falls without injury.

![Screen Shot 2013-03-09 at 1.30.27 PM]()

To control things like tooltips (along with performance in some cases), it’s a lot easier to have a single bar for each month/measure. To do that, we turn to a table calculation, here’s the Falls w/Injury for v7 Blend calculated field, set up in the secondary data source:

IF FIRST()==0 THEN

TOTAL([Falls w/Injury])

END

This table calculation has a Compute Using of Unit, so it partitions on the Month of Date. The IF FIRST()==0 part ensures that there is only one mark per partition. I’m using the TOTAL() aggregation here because it’s easier to set up and maintain. The alternative is to use WINDOW_SUM(), but in Tableau prior to version 8 there are some performance issues, so the calc would be:

IF FIRST()==0 THEN

WINDOW_SUM(SUM([Falls w/Injury]), 0, IIF(FIRST()==0,LAST(),0))

END

The , 0, IIF(FIRST()==0,LAST(),0) part is necessary in version 7 to optimize performance; you can get rid of it in version 8.
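The "total per partition, shown once" pattern can be sketched in Python as follows. Rows are partitioned by month, the values are totalled across the units in each partition, and only the first row of each partition carries the total. This models the logic of the table calculation, not Tableau's engine, and the sample values and the name `total_on_first` are illustrative.

```python
from itertools import groupby


def total_on_first(rows):
    """rows: (month, unit, falls). Put the partition total on the first row only."""
    out = []
    for month, group in groupby(sorted(rows), key=lambda r: r[0]):
        group = list(group)
        total = sum(falls for _, _, falls in group)   # like TOTAL() over the partition
        for index, (_, unit, _) in enumerate(group):  # index 0 is like FIRST()==0
            out.append((month, unit, total if index == 0 else None))
    return out


rows = [
    ("2012-04", "Unit A", 1),
    ("2012-04", "Unit B", 2),
    ("2012-05", "Unit B", 1),
]

marks = total_on_first(rows)
print(marks)
```

Every row after the first in a partition gets no value, which is why the view ends up with a single bar per month.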

You can also do a table calculation in the primary that accesses fields in the secondary, however TOTAL() can’t be used across blended data sources, so you’d have to use the WINDOW_SUM version.

With a second table calculation for the Falls w/out Injury, now the view can be built, starting with the line chart from above:

- Add Measure Names (from the Primary) to Filters Shelf, filter it for a couple of random measures.

- Put Measure Values on the Rows Shelf.

- Click on the Measure Values pill on Rows to set the Mark Type to Bar.

- Drag Measure Names onto the Color Shelf (for the Measure Values marks).

- Drag Unit onto the Level of Detail Shelf (for the Measure Values marks).

- Switch to the Secondary to put the two Falls for v7 Blend calcs onto the Measure Values Shelf.

- Set their Compute Usings to Unit.

- Remove the 2 measures chosen in the first step.

- Clean up the view – turn on dual axes, move the secondary axis marks to the back, change the axis tick marks to integers, set axis titles, etc.

This is pretty cool, we’re using domain padding to fill in for non-existent data and then having a blend happening at one level of detail while aggregating to another, just for the second axis. Here’s the v7 workbook on Tableau Public:

![Patient Falls Dashboard - Click on Image to go to Tableau Public]()

Patient Falls Dashboard – Click on image above to go to Tableau Public

Tableau Version 8 Blending – Faster, Easier, Better

For version 8, Tableau made it possible to blend data without requiring the linking fields in the view. Here’s how I build the above v7 view in v8:

- Add Measure Names (from the Primary) to Filters Shelf, filter it for a couple of random measures.

- Put Measure Values on the Rows Shelf.

- Click on the Measure Values pill on Rows to set the Mark Type to Bar.

- Drag Measure Names onto the Color Shelf (for the Measure Values marks).

- Switch to the Secondary and click the chain link icon next to Unit to turn on blending on Unit.

- Drag the Falls w/Injury and Falls w/out Injury calcs onto the Measure Values Shelf.

- Remove the 2 measures chosen in the first step.

- Clean up the view – turn on dual axes, move the secondary axis marks to the back, change the axis tick marks to integers, set axis titles, etc.

The results will be the same as v7.

Next: Tableau’s Data Blending Architecture

—————————————————————-

References:

[1] Kristi Morton, Ross Bunker, Jock Mackinlay, Robert Morton, and Chris Stolte, Dynamic Workload Driven Data Integration in Tableau, University of Washington and Tableau Software, Seattle, Washington, March 2012, http://homes.cs.washington.edu/~kmorton/modi221-mortonA.pdf.

[2] Hans Rosling, Wealth & Health of Nations, Gapminder.org, http://www.gapminder.org/world/.

[3] Jonathan Drummey, Tableau Data Blending, Sparse Data, Multiple Levels of Granularity, and Improvements in Version 8, Drawing with Numbers, March 11, 2013, http://drawingwithnumbers.artisart.org/tableau-data-blending-sparse-data-multiple-levels-of-granularity-and-improvements-in-version-8/.

